In this article:

- How the U.S. perceives China’s advancements in AI

- The coronavirus pandemic’s impact on AI and facial recognition technology

- What U.S. companies are doing in response to concerns about privacy and civil rights

- How China’s AI development is setting the stage for future advances in the technology

The coronavirus pandemic has resulted in mounting calls for heightened medical tracking in countries throughout the world, but some experts are concerned that it is also setting the stage for greater and more permanent privacy infringements powered by artificial intelligence.

Governments in countries including Germany, Australia, Israel, the United Kingdom, Singapore and South Korea are experimenting with mobile tracking systems to help identify users who have been in contact with those infected with Covid-19.

Apple and Google have also been working on similar solutions for their popular mobile operating systems, and have recently announced a ban on location tracking while still collecting user data. But experts warn that the proximity records used for these contact tracing systems can still intrude on users’ privacy, as they reveal the people users spend time with.

The level of privacy intrusion varies with each country, but China’s application is one of the most stringent, as it is mandatory and affects users’ freedom of movement.

China justifies such efforts as a necessity to contain the spread of this deadly disease, while the privacy-valuing West has expressed more hesitancy and skepticism. A presentation to a U.S. government commission has warned that China’s use of artificial intelligence (AI) systems to track citizens could lead to “draconian” consequences. Nonetheless, the U.S. could be heading in a similar direction as the coronavirus’ continued rampage becomes kindling for greater digital surveillance.

“It’s a long march to AI supremacy, and the pandemic has provided an excellent ground for the China model to thrive — surveillance from a tightly-knit, top-down governance power structure,” said T.H. Schee, an entrepreneur and tech policy consultant in Taipei who has advised the Taiwanese government on technology issues.

U.S. commission on China’s AI

A May 2019 presentation for the National Security Commission on Artificial Intelligence (NSCAI) points to potentially “draconian” implementation of China’s AI, but later says that “mass surveillance is a killer application for deep learning” and concedes that “having streets carpeted with cameras is good infrastructure for smart cities.”

The presentation and other documents obtained by the Electronic Privacy Information Center (EPIC) were uncovered through a Freedom of Information Act lawsuit. NSCAI, chaired by former Google CEO Eric Schmidt, is tasked with carrying out reviews of the “means and methods for the United States to maintain a technological advantage in artificial intelligence, machine learning, and other associated technologies related to national security and defense.”

The NSCAI told Forkast.News that the 2019 presentation released via a FOIA request is not an official NSCAI document but a presentation created and provided to the commission by an outside, third-party individual with expertise in AI.

The NSCAI has recently released two reports which detail policy recommendations for the use of AI during the coronavirus pandemic, which are separate from previous reports mentioned in this article focusing on broader strategies for the use of the technology.

“The strategic competition between the United States and China, fueled by technology developments, has not slowed down during the COVID-19 crisis; rather, it may be accelerating,” reads a report by NSCAI on mitigating the economic impacts of the Covid-19 pandemic and preserving U.S. strategic competitiveness in AI.

According to an earlier 2019 interim report by the NSCAI, “China represents the most complex strategic challenge confronting the United States because of the many co-dependencies and entanglements between the two competitors…. The Chinese Communist Party’s concept of ‘military-civil fusion’ has been elevated in national strategies and advanced through a range of initiatives. The distinction between civilian and military-relevant AI R&D is being eroded.”

The NSCAI interim report urges that any U.S. government AI systems be consistent with, and in service of, core American values, including privacy and civil liberties.

“In societies with strong commitments to civil liberties, [AI] can make us safer,” reads the NSCAI’s 2019 interim report. “Yet it can also be employed on an industrial scale as a tool of repression and authoritarian controls, as we have seen in China and elsewhere.”

In a separate privacy and ethics recommendations report by the NSCAI on the coronavirus pandemic, a group of commissioners recommended that however AI applications are used or deployed, these applications “must ensure that American values are preserved… adopting a series of best practices and standards will limit the risks that come with reliance on new technologies for responding to Covid-19.”

The data stored for the purposes of mitigating the spread of the virus should be used, the commissioners said, “only as long as it is needed for the task before it is deleted.”

Government surveillance

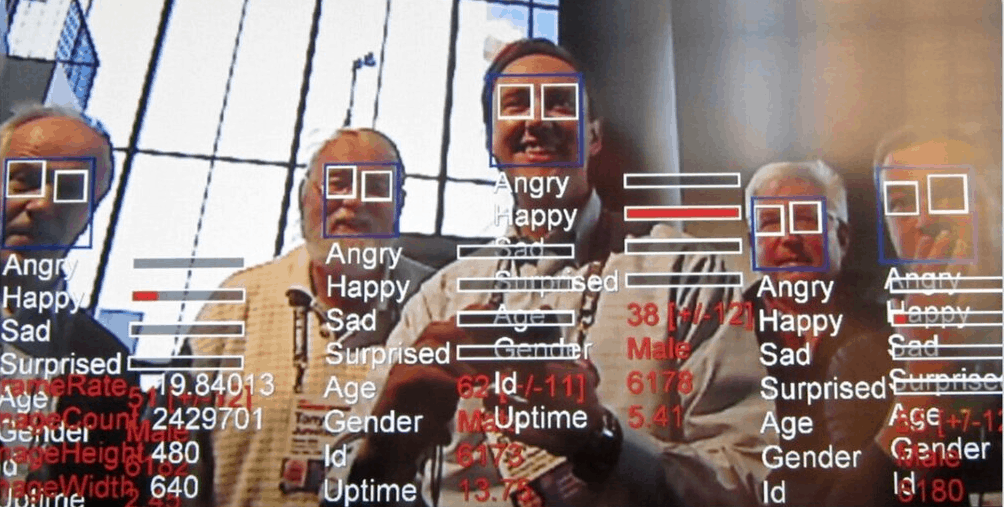

Government efforts to manage public health crises have historically resulted in heightened surveillance measures even when there were limitations to the technology. Contact tracing has been used to fight against numerous diseases such as the 1918-19 Spanish Flu pandemic, SARS and AIDS. But with new technologies like AI able to identify and track individuals better than ever before, many in the West are asking, how much is too much?

Despite U.S. government concerns about China’s influence and AI’s capabilities for citizen surveillance, like it or not, China’s AI technology is already becoming an increasing part of everyday life in the West.

China’s AI industry is experiencing huge growth internationally — including in the U.S. A 2019 report by the Carnegie Endowment for International Peace found over 60 countries already importing and using various forms of China’s AI surveillance technology, including facial recognition software.

Some in the U.S. private sector have taken a stand — and sought to distance themselves from their own government. For example, Google announced it would not renew its contract with the U.S. military for its Project Maven, which sought to improve AI capabilities for surveillance and targeting on the battlefield, after company employees protested.

After the police choking death of George Floyd in May this year, U.S. lawmakers as well as large companies also increased their scrutiny of AI and facial recognition technology.

Research has shown that AI usage in industries such as healthcare can be inaccurate and highly discriminatory when applied to African Americans. The New York Times also recently reported that a man in Michigan was wrongfully accused of a crime through faulty facial recognition.

In June, the Facial Recognition and Biometric Technology Moratorium Act was proposed by Democratic lawmakers to prohibit the use of biometric surveillance by the federal government.

The Senate bill would make it “unlawful for any Federal agency or Federal official, in an official capacity, to acquire, possess, access, or use in the United States — any biometric surveillance system; or information derived from a biometric surveillance system operated by another entity.”

Sen. Ed Markey of Massachusetts, the bill’s co-sponsor, said in a statement: “Facial recognition technology doesn’t just pose a grave threat to our privacy; it physically endangers Black Americans and other minority populations in our country.”

Large companies in the U.S. are now also coming under pressure to change. IBM said in a recent letter to Congress that it would stop working on facial recognition technology in order to advance racial equality. Microsoft soon followed suit, stating that it would not sell its facial recognition system to police departments until regulations for this technology are updated. Amazon has also announced a one-year moratorium on police use of its facial recognition product.

In contrast, companies in China are accustomed to following government dictates and actively supporting the priorities of central authorities.

“Tencent and Alibaba executives have proudly voiced public support for their collaboration with the government around national security initiatives,” reads the presentation for NSCAI. “Outwardly embracing this level of public private cooperation serves as a stark contrast to the controversy around Silicon Valley selling to the U.S. government.”

According to the NSCAI, China has deployed AI to advance an autocratic agenda and to commit human rights violations, setting an example that other authoritarian regimes will be quick to adopt and that will be increasingly difficult to counteract.

China’s developments

China and the U.S. had been scaling up their capabilities in AI long before the coronavirus infected patient zero. Since the start of its 13th Five-Year Plan in 2016, China has been focused on investing in and supporting the growth of a variety of emerging tech industries, including blockchain and AI. According to state-run news organization Xinhua, at least 20 applications based on blockchain were launched to tackle the coronavirus challenge earlier this year.

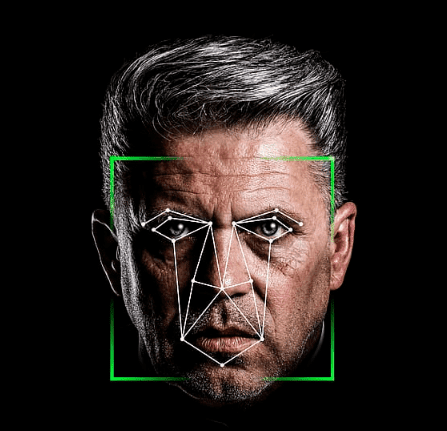

Diego Xue, founder of Beijing-based Horizon Technology, which uses machine learning algorithms in combination with augmented reality, said that soon in China, people taking a bus, train, plane, or even simply walking down the street will not be able to avoid being scanned by cameras with facial recognition.

The technology has already been used in China to successfully apprehend several fugitives who appeared in public places or in crowds of thousands of people. Recently, a murder suspect who had been a fugitive for the past 24 years gave up when he realized he could not escape being found out by China’s new coronavirus contact tracing app.

Unable to work or shop since he could not provide his health status to the tracing app without being identified, he turned himself in to the police.

Xue believes such applications for AI and machine learning systems would have a positive impact on society. But in China, where freedom of expression is more tightly regulated than most other parts of the world, such technologies could also be applied to crack down on information and opinions that the government does not like.

“In this case AI and machine learning can be used, [for example, through] speech recognition, to capture and punish those who are opinionated against the regime,” Xue told Forkast.News. “To the Western world, this is called repression.”

Hong Kong-based SenseTime, which is worth an estimated US$4.5 billion and is the world’s most valuable AI startup, has had a prominent role in China’s development of facial recognition technology. According to the company, it has provided “smart city” solutions to about 100 cities in China.

In 2019, SenseTime sold a 51% stake in a joint venture that was supplying Chinese police in Xinjiang Province with the company’s facial recognition capabilities.

Franky Chan, a spokesperson for SenseTime who has since left the company, told Forkast.News that AI goes beyond just facial recognition.

“Amongst their many potential applications, they can improve public safety, improve disease diagnosis, empower self-driving cars and many more,” Chan said. “We believe that AI can be a force for good for both our society and humanity, and we are committed to a responsible usage of AI technologies.”

The Chinese government has received criticism from a variety of international organizations and human rights groups, such as Amnesty International, for allegedly building extra-legal internment camps to hold an estimated 1.5 million Uyghur Muslims deemed a threat to national security in Xinjiang Province. Facial recognition systems linked to a sophisticated surveillance network including CCTV and specialized apps have been used extensively in the region to assist with government crackdowns, according to some reports.

In 2019, the Beijing Academy of Artificial Intelligence, an organization backed by the Chinese Ministry of Science and Technology, launched the “Beijing AI Principles,” a set of guidelines stipulating that “human privacy, dignity, freedom, autonomy, and rights should be sufficiently respected” in the development of AI.

U.S. worries about tech big brother

Meanwhile, civil rights and privacy advocates in the U.S. are sounding alarms about the use of facial recognition technology in the West.

“If unleashed, face surveillance would suppress civic engagement, compound discriminatory policing, and fundamentally change how we exist in public spaces,” wrote Matt Cagle of the American Civil Liberties Union and Brian Hofer, chair of Oakland’s Privacy Advisory Commission, in an op-ed following San Francisco’s ban of facial recognition technology in 2019.

Cagle, Hofer and others contend that facial recognition technology is not compatible with a healthy democracy. Others believe the technology should not be banned outright, but closely regulated.

But experts in national security say facial recognition and other AI technology have a valid application when it comes to military environments.

Image recognition, which is an important element of facial recognition technology, can save lives by using drones rather than sending out humans to surveil areas and identify potential adversaries.

In 2017, the Trump administration issued an executive order expediting the creation of a system to capture the biometric information of individuals to “protect the Nation from terrorist activities by foreign nationals admitted to the United States.”

U.S. Customs and Border Protection is already using facial recognition technology at points of entry such as airports and seaports, and in 2017 made “considerable progress” developing and applying those systems to track passengers.

According to a report by Homeland Security’s Office of Inspector General in 2018, the end goal of the program is to “biometrically confirm the departures of 97 to 100 percent of all foreign visitors processed through the major U.S. airports.”

Some believe that the data is going to be valuable in applications like securing the border, as well as, of course, monitoring those infected by the coronavirus. Russia and China, for example, are using facial recognition to check for elevated temperatures in crowds and to identify individuals who aren’t wearing masks.

But who ultimately controls the technology and keeps the data collected? In 2019, hackers breached systems linked to the U.S. Customs and Border Protection agency and stole images of vehicle license plates and information on travelers at border crossings, adding to doubts regarding the security of image recognition technologies.

Beyond facial recognition

Using AI to identify and track people in crowds or at airports isn’t the only way it could be used to undermine people’s autonomy.

“It is very likely that the U.S. will attempt to emulate aspects of China’s artificial intelligence monitoring systems when it comes to border security and counter-insurgency,” Darren Byler, lecturer of sociocultural anthropology at the University of Washington, told Forkast.News.

Byler, who writes about “terror capitalism” and how it is practiced in China’s Xinjiang province to control Uyghur Muslims, said that the rule of law and rights to privacy and free speech in the U.S. ostensibly prevent American security agencies from implementing some of the most abusive aspects of surveillance seen in the Chinese context.

“Some of these activities promote forms of ethno-racial inequality and exclusion, but they are not purpose-built to do this as they are in Xinjiang,” Byler said.

Not all AI or machine learning applications carry the risk of negative consequences though. At the University of Hong Kong, Benjamin C.M. Kao — a professor in the department of computer science — leads a team using machine learning to assist a growing field in the financial services industry known as “RegTech,” short for regulatory technology.

“Artificial intelligence is a very powerful tool, it has applications in almost every sector. So in my opinion, whoever masters it well will get the real upper hand in most things,” Kao told Forkast.News, citing AI’s many positive uses in finance, investigating regulatory violations and even investigative journalism.

Technology research firm IDC issued a report last March projecting that global spending on AI systems will reach US$79.2 billion by 2022.

As the race between the U.S. and China to maintain dominance in the AI field develops over time, it is also important to consider how younger generations are adapting to the trend and what impact their actions could have in the coming years.

According to Kao, the current generation of students in his department from China will become leaders in society, and will exert a large influence by applying their knowledge of AI.

“I would say that in 10 to 20 years down the road, when they mature and can be leaders in different sectors, they would be introducing more AI elements, so that’s going to be an AI revolution.”

But others warn that the coronavirus pandemic could trigger a shift toward greater repressive use of the technology over time.

“Facial recognition technology is a menace disguised as a gift,” wrote Woodrow Hartzog, professor of law and computer science at Northeastern University, in an essay co-authored with Evan Selinger. “It’s an irresistible tool for oppression that’s perfectly suited for governments to display unprecedented authoritarian control and an all-out privacy-eviscerating machine.”